Introduction

Education is at a turning point. The rise of artificial intelligence (AI) has sparked debate in classrooms, offices, and boardrooms alike. For educators and architects designing learning environments, the challenge is clear: how can AI be integrated in ways that support—not replace—the human-centered process of teaching and learning?

The answer lies in balance. Technology must follow pedagogy, not the other way around. This paper explores the evolving role of AI in education, highlights its benefits and risks, and outlines strategies to make learning more equitable and future-ready.

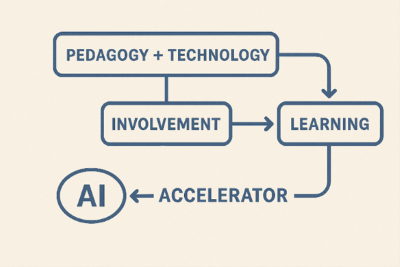

From Involved Learning to AI-Enhanced Learning

Modern education has moved far beyond passive teaching. Models like collaborative, active, and problem-based learning emphasize engagement and participation. AI extends this evolution by offering tools that personalize instruction, support diverse learners, and strengthen the teacher’s role as a guide.

Practical applications already exist: designing individualized education plans for neurodiverse students, creating lesson materials that adapt to different reading levels, and acting as a digital tutor to reinforce critical thinking and problem-solving.

Rather than undermining instruction, these uses highlight how AI can deepen involvement and equity in learning.

Higher Education and AI PCs

Colleges and universities are navigating an inflection point. AI-enabled devices—equipped with neural processing units that handle AI tasks locally—signal a move beyond cloud dependence.

The benefits are significant: immediate processing for real-time translation, transcription, and editing; stronger privacy for sensitive research and student data; and direct, hands-on experience with advanced AI tools that prepare students for AI-driven workplaces.

For higher education leaders, the modernization of campus technology is no longer optional. Aligning infrastructure with institutional goals ensures that investments in AI strengthen both academic outcomes and digital literacy.

Managing the Risks

The “dark side” of AI is real. Plagiarism, academic dishonesty, and overreliance on generative tools challenge the integrity of learning. At the same time, confusion about when AI use is acceptable undermines both students and faculty.

To address these concerns, institutions are beginning to experiment with clearer, more structured policies. Some schools have adopted a “traffic light” system: red signals no AI use allowed, yellow permits limited uses such as outlining, and green indicates full flexibility. This approach provides transparency for both students and instructors while reinforcing expectations around academic integrity.
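For institutions that want to record these levels in a syllabus tool or learning management system, the three-tier rule can be sketched as a small lookup. This is an illustrative sketch only: the names `AIPolicy`, `YELLOW_ALLOWED_USES`, and `is_use_allowed` are hypothetical, and the set of limited uses under yellow is an assumption that each instructor would define per assignment.

```python
from enum import Enum


class AIPolicy(Enum):
    """Hypothetical encoding of the three traffic-light levels."""
    RED = "red"        # no AI use allowed
    YELLOW = "yellow"  # limited uses only, such as outlining
    GREEN = "green"    # full flexibility


# Assumption: the limited uses permitted under YELLOW. The text names
# outlining only; an instructor would extend this set per assignment.
YELLOW_ALLOWED_USES = {"outlining"}


def is_use_allowed(policy: AIPolicy, use: str) -> bool:
    """Return True if the described AI use is permitted under the policy."""
    if policy is AIPolicy.RED:
        return False
    if policy is AIPolicy.GREEN:
        return True
    # YELLOW: only explicitly listed uses are permitted.
    return use in YELLOW_ALLOWED_USES


print(is_use_allowed(AIPolicy.YELLOW, "outlining"))  # True
print(is_use_allowed(AIPolicy.YELLOW, "drafting"))   # False
```

Keeping the yellow tier as an explicit list of permitted uses, rather than a list of banned ones, mirrors the transparency goal: anything not named is off limits by default.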

Others are rethinking assessment formats altogether. For example, instead of relying solely on written essays, where plagiarism is harder to detect, some instructors are incorporating video-based reports, oral defenses, or interactive presentations. These formats not only reduce the likelihood of AI misuse but also strengthen students’ communication and critical thinking skills in ways that traditional essays often cannot.

Together, these strategies illustrate that managing the risks of AI is less about banning the technology and more about designing assignments and policies that preserve authenticity while still encouraging exploration. The path forward requires institutions to define ethical use, model responsible practices, and integrate digital literacy into curricula so that AI remains an ally rather than an adversary.

Equitable Outcomes and Neurodiverse Learners

AI’s greatest promise lies in its ability to meet learners where they are, particularly students who process and engage with information differently. For neurodiverse learners—those with ADHD, autism spectrum conditions, dyslexia, or other cognitive variations—AI tools can transform barriers into bridges.

For example, text simplification tools can reframe dense passages into accessible language, allowing students with dyslexia to better grasp key concepts without losing meaning. Similarly, text-to-speech platforms and multimodal resources provide students on the autism spectrum with flexible ways of engaging—whether through auditory cues, visual simulations, or interactive VR lessons. In behavioral contexts, predictive AI models can assist teachers by signaling when a student may be on the verge of disengagement, enabling timely and compassionate intervention before escalation occurs.

These applications illustrate that AI is not merely a supplement, but a pathway toward equity. By offering personalization and adaptability at scale, it allows teachers to create classroom environments where neurodiverse students are not just accommodated but actively supported in reaching their full potential.

Ethical Considerations and the Path Ahead

As AI becomes embedded in education, its promise comes with weighty responsibilities. The integration of intelligent systems into classrooms, curricula, and assessments raises questions that educators, administrators, and policymakers cannot afford to overlook. Chief among these are issues of equity, transparency, data protection, and responsible use.

Responsibilities for Educators and Institutions

Educators bear the responsibility of ensuring that AI tools amplify, rather than diminish, human teaching. This includes modeling ethical use for students, setting clear boundaries for acceptable AI applications, and teaching critical AI literacy so that learners understand both its power and its limitations. Institutions, meanwhile, must establish governance frameworks that define how AI will be used, how data will be collected and stored, and what safeguards are in place to prevent misuse.

Safeguarding Student Privacy and Data

One of the most pressing ethical concerns is the protection of student data. AI platforms often rely on collecting and analyzing personal information—ranging from academic performance to biometric feedback. Institutions must commit to minimizing data collection, anonymizing records whenever possible, and ensuring compliance with regulations such as FERPA and GDPR. Beyond compliance, schools should adopt a principle of data dignity, recognizing that students own their learning data and have a right to transparency in how it is used.
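As a rough illustration of what minimizing collection and masking identities can look like in practice, the sketch below drops every field an analytics tool does not need and replaces the student ID with a keyed hash. The field names, the `ALLOWED_FIELDS` set, and both function names are hypothetical. Note that keyed hashing is pseudonymization rather than full anonymization: anyone holding the key can re-link records, so the output would generally still need to be treated as personal data.

```python
import hashlib
import hmac

# Assumption: the only fields the downstream tool actually needs.
ALLOWED_FIELDS = {"course", "score"}


def pseudonymize(student_id: str, secret_key: bytes) -> str:
    """Replace a student ID with a keyed hash (HMAC-SHA256, hex encoded)."""
    return hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, secret_key: bytes) -> dict:
    """Keep only allowed fields and swap the raw ID for a pseudonym."""
    reduced = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    reduced["student_ref"] = pseudonymize(record["student_id"], secret_key)
    return reduced


# Usage with made-up data; a real key would come from a managed secret store.
key = b"example-key-not-for-production"
raw = {"student_id": "s-001", "name": "Sample Student", "course": "BIO101", "score": 88}
print(sorted(minimize_record(raw, key).keys()))  # ['course', 'score', 'student_ref']
```

The same ID always maps to the same pseudonym under a given key, so a student's records can still be linked for longitudinal analysis without exposing the name or raw ID.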

Equitable Access and Avoiding Bias

Another critical responsibility lies in equity. AI systems are only as unbiased as the data used to train them. If left unchecked, algorithms can reinforce stereotypes or exclude marginalized groups. To address this, institutions must adopt multi-layered review processes, regularly auditing AI tools for unintended bias. They should also provide equitable access by ensuring that students from under-resourced schools or communities have the same opportunities to engage with AI as their peers in well-funded institutions. Subsidized access programs, shared resource centers, and targeted training for teachers in high-need districts can help narrow this gap.

A Framework for Safe and Equitable Use

Moving forward, a practical framework for ethical integration of AI in education should include:

- Transparency: Clear communication with students and families about how AI is used and what data is collected.

- Accountability: Systems for educators and administrators to review AI-driven outcomes, challenge results, and intervene when tools fail.

- Equity: Policies that ensure AI enhances learning opportunities for all students, not just those with access to advanced technology.

- Professional Development: Ongoing training for educators so they can confidently and critically guide students in AI use.

- Continuous Oversight: Independent review boards at the institutional or state level to evaluate AI tools and their long-term impacts.

Conclusion

AI in education is not a passing trend. It is a permanent, transformative force that will shape how students learn, how educators teach, and how institutions design spaces for the future. For architects, this means reimagining classrooms as adaptive, flexible environments. For educators, it means embracing AI as an ally rather than an adversary.

The opportunity is clear: use AI to enhance equity, engagement, and authenticity in education—while keeping human judgment, creativity, and ethics at the center.