The terminology used to describe AI systems is in a state of flux. “AI” is often used so broadly that it becomes meaningless. In response, some writers reach for very specific terms, such as “large language model chatbot,” which can be helpful in some contexts but can also be unwieldy and hard to follow. The middle ground between these two extremes is to use precise terms and stay aware of the appropriate context. Certain terms are bound to show up in your advanced AI conversations. While the terminology of AI is constantly evolving, the technical terms below are being used today to describe the building blocks of AI.

Generative Image Platforms

This term refers to AI systems that create images from text. Visual generative AI systems are trained on sets of images paired with text captions. You can access these image creation engines, whose output is also referred to as synthetic media, through web portals. You type in descriptive text to generate images and then revise the images with further text commands. Two of the most popular image platforms are DALL-E 2 and Midjourney. Be aware that generative AI platforms raise copyright issues, including ownership of the output and potential infringement.

Neural Style Transfer (NST)

Neural style transfer is the technique of blending the style of one image into another image while keeping the content intact. In the context of generative image platforms, it refers to the class of software algorithms that use deep neural networks to manipulate digital images or videos so that they adopt the appearance or visual style of another image.

Meta Prompt

Meta-Prompt is a simple self-improving language “agent” that reflects on interactions with a user and modifies its own instructions based on those reflections. The only thing that is fixed about the agent is the meta-prompt, which is an instruction for how to improve its own instructions. For example, a customer service chatbot engages in a conversation with a user who may make a request, give instructions, or share feedback. At the end of the interaction, a meta-prompt directing the chatbot to evaluate its performance as an assistant might be: “What could be revised in the process to satisfy the user in fewer interactions?” The results of that meta-prompt would then be incorporated into the chatbot’s instructions. The agent has no separate memory, but it can self-improve by incorporating useful details about previous interactions into its instructions.
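The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: `revise_instructions`, `fake_llm`, and the sample transcript are hypothetical names and data invented for this example, and `fake_llm` stands in for a call to a real language model so that the sketch runs offline.

```python
from typing import Callable, List

# The one fixed piece of text: it tells the model how to revise the instructions.
META_PROMPT = (
    "Here are the assistant's current instructions and a transcript of its "
    "latest conversation. What could be revised in the process to satisfy "
    "the user in fewer interactions? Reply with the revised instructions only."
)


def revise_instructions(
    instructions: str,
    transcript: List[str],
    llm: Callable[[str], str],
) -> str:
    """Ask the model to reflect on one conversation and return new instructions."""
    prompt = (
        f"{META_PROMPT}\n\n"
        f"Current instructions:\n{instructions}\n\n"
        "Transcript:\n" + "\n".join(transcript)
    )
    return llm(prompt).strip()


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call (assumption for this sketch)."""
    return "Be a helpful support agent. Ask for the order number up front."


instructions = "Be a helpful support agent."
transcript = [
    "User: My package is late.",
    "Assistant: Sorry to hear that. Can you share more details?",
    "User: Order 1234.",
]

# Only the revised instructions carry over; the transcript itself is discarded.
instructions = revise_instructions(instructions, transcript, fake_llm)
print(instructions)
```

In a real deployment, `fake_llm` would be replaced by a model call, and the revised instructions would be used as the starting point for the next conversation.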

Retrieval Augmented Generation (RAG) or Grounding

Grounding is the process of supplying large language models (LLMs) with information that is use-case specific and relevant but not available as part of the LLM’s trained knowledge. It is used to ensure the quality, accuracy, and relevance of the generated output. While LLMs come with a vast amount of knowledge already, that knowledge is not tailored to specific use cases. To obtain accurate and relevant output, companies like Microsoft provide LLMs with relevant information (database records, application data, etc.). In other words, they “ground” the models in the context of their customers’ specific use cases.
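One common way to ground a model is simply to place the relevant information in the prompt ahead of the question. The sketch below shows that idea only; `build_grounded_prompt`, the prompt wording, and the sample snippets are assumptions made for illustration and do not reflect any particular vendor's implementation.

```python
from typing import List


def build_grounded_prompt(question: str, context_snippets: List[str]) -> str:
    """Combine use-case-specific text with the user's question in one prompt."""
    numbered = "\n".join(
        f"[{i}] {snippet}" for i, snippet in enumerate(context_snippets, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{numbered}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Hypothetical records pulled from a company database (made up for this sketch).
snippets = [
    "Return window: 30 days from delivery.",
    "Refunds are issued to the original payment method.",
]
prompt = build_grounded_prompt("How long do I have to return an item?", snippets)
print(prompt)
```

The resulting string is what would be sent to the LLM, so the model answers from the supplied records rather than from its general training data.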

Semantic Search

Semantic search is the primary technique for Retrieval Augmented Generation (RAG). The process involves indexing documents or fragments of documents based on their semantic representation (meaning or logic) using embeddings.

In grammar, embedding refers to the process of inserting one sentence into another sentence, such as putting a question into an affirmative sentence and changing the word order. For example, in the question, “I wonder if you could tell me what time it is?”, the embedded question is “what time is it?” In AI, the term means something different: an embedding is a list of numbers (a vector) that represents an input such as a word, a sentence, or a document. Embeddings make it easier to apply machine learning to large inputs representing words. An embedding captures some of the meaning of the input by placing inputs with similar meanings close together in the embedding space. Embeddings can be learned once and reused across models.
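To make "close together in the embedding space" concrete, the sketch below compares a few vectors with cosine similarity, one common closeness measure. The three-dimensional vectors are made up by hand for illustration; real embedding models produce vectors with hundreds or thousands of dimensions.

```python
import math
from typing import Dict, List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-written toy vectors (an assumption for this sketch, not model output).
toy_embeddings: Dict[str, List[float]] = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.9, 0.15],
    "invoice": [0.1, 0.2, 0.95],
}

sim_cat_kitten = cosine_similarity(toy_embeddings["cat"], toy_embeddings["kitten"])
sim_cat_invoice = cosine_similarity(toy_embeddings["cat"], toy_embeddings["invoice"])

# Related words score higher than unrelated ones.
print(f"cat vs kitten:  {sim_cat_kitten:.3f}")
print(f"cat vs invoice: {sim_cat_invoice:.3f}")
```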

At retrieval time, the query is converted into its own semantic representation, and a similarity search compares it against the indexed documents to find the most relevant ones. In this way, semantic search is a fast and powerful technique that relies on three parts: an embedding model, a vector index, and a similarity search.

Semantic Index for Microsoft Copilot

Semantic indexing creates a sophisticated “map” of user and corporate data. This map is formed by encoding and indexing the data as vectors that combine the phrases, meanings, relationships, and context of the data. Microsoft recently announced a version of Copilot that incorporates semantic indexing to help Microsoft 365 Copilot learn more about your organization (privacy and data protection are respected), allowing it to respond better to user queries or prompts.

Vector Indexes

Vector indexes and vector databases are tools commonly used in natural language processing. These systems store documents and index them using vector representations, or embeddings, which allows for efficient similarity searches and document retrieval. There is a wide variety of products and features available in this space.
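The store-then-search behavior can be sketched with a tiny in-memory index. This is an illustrative sketch under stated assumptions: `TinyVectorIndex` is a hypothetical class, the embeddings are hand-written rather than produced by a model, and the search is a brute-force scan, whereas production products generally use more elaborate data structures to stay fast at scale.

```python
import math
from typing import List, Tuple


class TinyVectorIndex:
    """Brute-force in-memory index that stores (document, unit vector) pairs."""

    def __init__(self) -> None:
        self._entries: List[Tuple[str, List[float]]] = []

    @staticmethod
    def _normalize(vector: List[float]) -> List[float]:
        length = math.sqrt(sum(x * x for x in vector))
        return [x / length for x in vector]

    def add(self, document: str, embedding: List[float]) -> None:
        # Normalizing at insert time makes cosine similarity a plain dot product.
        self._entries.append((document, self._normalize(embedding)))

    def search(self, query_embedding: List[float], k: int = 2) -> List[Tuple[str, float]]:
        """Return the k stored documents most similar to the query, best first."""
        query = self._normalize(query_embedding)
        scored = [
            (doc, sum(q * v for q, v in zip(query, vec)))
            for doc, vec in self._entries
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


index = TinyVectorIndex()
# Made-up embeddings; a real system would get these from an embedding model.
index.add("How to reset your password", [0.9, 0.1, 0.0])
index.add("Quarterly sales report", [0.0, 0.2, 0.9])
index.add("Recovering a locked account", [0.8, 0.3, 0.1])

# Hypothetical embedding of the query "I forgot my login".
results = index.search([0.85, 0.2, 0.05], k=2)
for document, score in results:
    print(f"{score:.3f}  {document}")
```

With these toy vectors, the two account-related documents are returned and the sales report is not, even though the query shares no keywords with either document.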

Image: Vectoring applied to Call Center to Measure Agent’s Level of Empathy During Customer Interactions

Hosted Foundation Models

A foundation model is a deep learning model that has been pre-trained on extremely large data sets scraped from the public internet. Unlike narrow artificial intelligence (narrow AI) models that are trained to perform a single task, foundation models are trained with a wide variety of data and can transfer knowledge from one task to another. This type of large-scale neural network can be trained once and then fine-tuned to complete different types of tasks. Foundation models can cost millions of dollars to create because they can contain hundreds of billions of parameters (values learned during training, as distinct from hyper-parameters[1]) that have been trained with hundreds of gigabytes of data. Once completed, each foundation model can be adapted many times over to automate a wide variety of discrete tasks.

Today, foundation models are used to build artificial intelligence applications that rely on natural language processing (NLP) and natural language generation (NLG). Popular examples include ChatGPT and generative image platforms like DALL-E 2.

Artificial General Intelligence (AGI)

No vocabulary list is complete without reference to AGI. The concept is controversial, but achieving AGI as soon as possible is the stated goal of entrepreneur Sam Altman, CEO of OpenAI, the company that developed ChatGPT. The concept of AGI is that artificial intelligence will eventually learn to accomplish any intellectual task that human beings or animals can perform. Hence, it’s referred to as general intelligence rather than specific intelligence. AGI is also defined as the point when an autonomous system surpasses human capabilities. Other definitions focus on self-awareness, feelings, and the capacity to experience sensations and emotions.

The timeline for achieving AGI is uncertain. Some AI theorists argue that it may be possible in years or decades, others maintain it might take a century or longer, and a minority believe it may never be achieved. Additionally, there is debate regarding whether modern deep learning systems, such as GPT-4, are early precursors of AGI or whether completely new approaches will be required to achieve it.

Conclusion

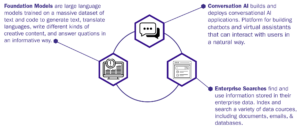

This list of technical terms associated with AI is just the tip of the iceberg. AI is a sophisticated space with vocabulary as advanced as the technology is transformative, and navigating the complex landscape of AI terminology requires a delicate balance between precision and comprehensibility. Discussing AI effectively with advanced terminology involves understanding the nuances of key concepts, like Foundation Models, Conversational AI, and Enterprise Search. In this ever-evolving landscape, mastering advanced AI terminology empowers professionals outside of the AI world to navigate the intricacies of the technology and discover new implementations, fostering meaningful conversations and driving innovation.

[1] A hyper-parameter is a setting used to control the learning process. It is different from a parameter, which is determined by training the model. A hyper-parameter is similar to an “initial condition” in classical physics.