Many terms fall under the broad headline of Artificial Intelligence. This document will help you learn the most common ones and use them correctly. Because AI is changing so fast, understanding the vocabulary and context in use today makes it easier to absorb the new terms that will build on these foundations, and to avoid old terms that no longer apply.

What is AI?

The term “artificial intelligence” (AI) is often used so broadly that it can be almost meaningless. It can refer to any technology that seems intelligent, from a simple chatbot to a self-driving car. The general understanding today is that AI is the area of technology where machines perform tasks that are so advanced that they appear to possess human intelligence.

AI is a broad field, and there are many different subfields within it. Some of the most common subfields include machine learning, natural language processing, and computer vision. Machine learning is a type of AI that allows machines to learn from data without being explicitly programmed to make a specific decision or take a specific action. Natural language processing is the ability of machines to understand and process human language. Computer vision is the ability of machines to see and understand the world around them.

What do we mean when we say AI, and where does the term fit?

While experts in the field can be critical of using the broad term AI for the varied technologies in the AI realm, there must be a term that non-experts can use to discuss machine intelligence. So, AI has become the short and easy way we refer to this complex field of technology. However, because AI is not a single thing but a category of technologies, it is better to use the term in the plural, as in “AI technologies.” Also, to communicate effectively, we should be specific about what type of AI we are referring to, like “self-driving AI” or “chatbot AI”.

We need to consider how we conceptualize AI because we don’t want to give it agency, and we need to remember who is ultimately responsible for the AI. Someone who casually says, “AI is doing this,” is not recognizing that someone created those AI systems and put them in place. Ultimately, the humans that create AI systems and use AI tools bear responsibility for the outcome of the experience. Humans, not machines, are responsible.

How you apply the term AI in other frameworks and how you think about AI conceptually matter more than the precise definition. For example, avoid the phrase “the AI did this.” When we use AI, we are interacting with a machine, not a person. AI is a tool, a means to perform tasks; it does not have independent thoughts or feelings (yet).

AI vs. Machine Learning (ML). Do they mean the same thing?

Machine learning is one category within the broad family of AI technologies, but the terms are frequently used interchangeably because many of the advancements in the field of AI are occurring in ML. The term machine learning can deflate the hype that can occur when the term AI is used indiscriminately, reminding people that there is real science behind AI magic. However, the term ‘learning’ can also cause people to believe that these systems are intelligent and can act on their own.

Machine learning systems (MLS) are software programs that are trained to make decisions or predict output based on data. They do not have any physical embodiment, and they cannot act on their own. MLS make predictions or decisions based on the data that has been used to train them. The results of this training, a kind of “meta” programming that lets these systems improve their interactions with very little instruction, look a lot like human learning. However, there is a lot of human interaction that goes on behind the scenes to make that life-like experience possible.

Massive amounts of training data are fed into the algorithms that power MLS training. These systems “learn” what fire hydrants look like because humans first identify fire hydrants in software applications that provide data to AI creators. Companies employ thousands of people to click on images of fire hydrants, and their recorded responses are sold as the building blocks of these AI models. The AI factory turns human “rulemaking” into machine “decision-making.” Again, the appropriate context of an AI term like Machine Learning is more important than its actual definition.
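
As a rough illustration of how human labels become machine decisions, here is a minimal Python sketch. The feature names (redness, height), the example values, and the nearest-centroid approach are all assumptions chosen for illustration; real image systems work on far richer data and use different methods.

```python
from math import dist

# Hypothetical, human-labeled examples: (redness, height_in_meters) -> label.
# In a real system these labels would come from people tagging images.
LABELED_EXAMPLES = [
    ((0.9, 0.6), "fire hydrant"),
    ((0.8, 0.7), "fire hydrant"),
    ((0.2, 1.1), "mailbox"),
    ((0.3, 1.2), "mailbox"),
]


def train(examples):
    """Average the feature vectors for each label (one centroid per label)."""
    sums, counts = {}, {}
    for features, label in examples:
        total = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            total[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {
        label: tuple(v / counts[label] for v in total)
        for label, total in sums.items()
    }


def predict(model, features):
    """Return the label whose centroid is closest to the new example."""
    return min(model, key=lambda label: dist(model[label], features))


model = train(LABELED_EXAMPLES)
print(predict(model, (0.85, 0.65)))  # fire hydrant
print(predict(model, (0.25, 1.0)))   # mailbox
```

Nothing in this program contains a rule such as “hydrants are red and short.” The human labels supply that knowledge, and the code only summarizes it.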

Here are a few more terms that are used frequently in AI conversations that are important to understand and apply in the right context.

Deep Learning (DL)

Deep learning is considered a subset of machine learning. This term is used to describe the machine learning that occurs when the computational model consists of several processing elements, typically called a neural network, designed to imitate how humans think and learn. Recent advancements in processing speed and data analytics have allowed for larger, more sophisticated neural networks. In these complex neural networks, processing nodes receive data, interact, and deliver outputs based on their predefined activation functions in a way that mimics how a human brain detects patterns in large unstructured data sets. The term ‘deep’ refers to the large number of node layers in the neural network. With its ability to observe, learn, and react to complex situations faster than humans, deep learning can be used to solve pattern recognition problems quickly without human intervention.

Neural Networks

A neural network is a computational model (typically referred to as an artificial neural network) that is composed of layers of nodes. Each node processes the data it receives and passes its result on to other nodes through weighted connections and thresholds. The weights are adjusted during training rather than written by hand, so the network is given broadly defined objectives instead of detailed subroutines. In general, a neural network generates outputs based on the strength of the relationships between the nodes. The process of “learning” that occurs in a neural network can be much faster than traditional, hand-programmed data processing. Neural networks are the key component of large language models, which are trained on extremely large data sets.
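
To make the idea of nodes, weights, and thresholds concrete, here is a minimal Python sketch of a two-layer network. The weights and thresholds are hand-picked assumptions so the example stays small; in a real neural network they are learned from data during training, and the function names here are purely illustrative.

```python
def step(weighted_sum, threshold):
    """Activation function: the node 'fires' (1) only above its threshold."""
    return 1 if weighted_sum > threshold else 0


def node(inputs, weights, threshold):
    """One node: weight each input, add them up, apply the activation."""
    return step(sum(i * w for i, w in zip(inputs, weights)), threshold)


def tiny_network(a, b):
    """Two-layer network with hand-picked weights that computes XOR."""
    # Hidden layer: one node behaves like OR, the other like AND.
    either = node((a, b), weights=(1, 1), threshold=0.5)
    both = node((a, b), weights=(1, 1), threshold=1.5)
    # Output layer: fire when 'either' is on but 'both' is off.
    return node((either, both), weights=(1, -1), threshold=0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", tiny_network(a, b))
```

No single node here can tell whether exactly one input is on, but the layers can when combined. That is the basic reason layers are stacked.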

Large Language Models (LLM)

A large language model is a language model implemented as an artificial neural network. LLMs grew out of the theory that the larger the data set, the more correlations can be drawn. The larger the amount of data, the faster the processing system needs to be. Modern LLMs are very fast, and their speed comes from processing vast amounts of unlabeled text, images, etc., in parallel and converting the data into a tokenized vocabulary with an estimated probability distribution. The word ‘large’ in LLM is really a subjective value. Technically, there is no scale between small and large; size is not as important as the “grounding” of the data. A good argument can be made for dropping ‘large’ and just using the term language models.
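
The phrase “tokenized vocabulary with an estimated probability distribution” can be shown with a toy model in Python. This is only a sketch: the miniature corpus is made up, the tokenizer just splits on spaces, and the model looks at one previous token, whereas real LLMs use sub-word tokens, far longer context, and neural networks rather than simple counts.

```python
from collections import Counter, defaultdict

# Hypothetical miniature corpus; real models train on vastly more text.
CORPUS = "the cat sat on the mat . the cat ate the fish ."


def tokenize(text):
    """Split text into tokens (real tokenizers use sub-word pieces)."""
    return text.lower().split()


def train_bigram_model(tokens):
    """Count which token follows which, then normalize into probabilities."""
    follow_counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        follow_counts[current][following] += 1
    return {
        token: {nxt: n / sum(counts.values()) for nxt, n in counts.items()}
        for token, counts in follow_counts.items()
    }


model = train_bigram_model(tokenize(CORPUS))
print(model["the"])  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
```

After “the”, this toy model estimates that “cat” is twice as likely as “mat” or “fish”, simply because that is what it saw in its training text.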

Generative Pre-Trained Transformers (GPT)

Generative AI is a system that creates human-like content from a prompt. Content can include images, audio, text, computer code, etc. Generative AI is different from previous software in that the content it creates can seem unique or novel to the user versus a generic or canned response.

GPT and LLM are among the most popular terms associated with AI, largely because of Microsoft’s partnership with OpenAI, the AI research and deployment company that built ChatGPT. GPT refers to a language prediction model built on a neural network architecture known as a transformer. These models are Large Language Models, trained on massive amounts of data. ChatGPT is the name OpenAI gave to its natural language processing tool built on GPT models, while Google’s comparable chatbot is called Bard. While any term is bound to become obsolete as this technology moves rapidly forward, the name ChatGPT will likely linger on for some time because it was the first product of its kind to capture broad public attention and makes for great storytelling.

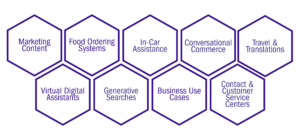

In other words, think of the GPT as the human interface, the neural network as the nervous system, and the LLM as the knowledge drawn from a universe of data. The combination of GPTs and LLMs allows a system to generate many types of text, translate languages, write different kinds of creative content, and answer questions in an informative way. The generative capabilities of these combined elements allow the answers to a prompt to be continuously refined without having to rephrase the question. There is a wide range of use cases for the combination of a GPT and LLM, such as:

Chatbots

A chatbot is a computer program that uses artificial intelligence (AI) and natural language processing (NLP) to understand customer questions and automate responses to them, simulating human conversation. NLP is the ability of a computer program to understand human language as it is spoken and written.

Hallucinations

“Hallucinations” is the term used when the response to a GPT query includes mistakes. You enter a question, and the response is built from the patterns learned by the neural network, but it is factually incorrect. Today, this happens frequently. Because the GPT replicates human interaction, hallucination is a convenient term to describe the phenomenon of incorrect responses, but it does not have the same meaning in this context as it does when it is used to describe human behavior. Machines do not hallucinate; they make mistakes. In the future, these errors may become harder to detect and may seem even more like conscious, perception-like experiences.
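
A toy Python sketch can show how a fluent but wrong answer falls out of pattern matching alone. Everything here is an assumption for illustration: the three-sentence training text is invented, and the model only looks at the last word of the prompt, which is far cruder than a real GPT.

```python
from collections import Counter, defaultdict

# Hypothetical training text. "of france" appears more often than "of italy".
CORPUS = (
    "paris is the capital of france . "
    "lyon is a city of france . "
    "rome is the capital of italy ."
)


def train(tokens):
    """Count, for each token, which tokens follow it and how often."""
    follow_counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        follow_counts[current][following] += 1
    return follow_counts


def complete(model, prompt):
    """Append the single most frequent follower of the prompt's last token."""
    last_token = prompt.split()[-1]
    next_token = model[last_token].most_common(1)[0][0]
    return f"{prompt} {next_token}"


model = train(CORPUS.split())
print(complete(model, "rome is the capital of"))  # rome is the capital of france
```

The completion reads naturally and follows the most common pattern in the training text, yet it is false. The program has no notion of truth to check against, only frequencies.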

Summary

Artificial Intelligence and machine learning are the cornerstones of the next evolution of computing, so it is essential to understand the terms used to discuss these technologies. It doesn’t matter if ‘Artificial Intelligence (AI)’ is the perfect term for this category of technology; it is the term most widely used. AI is a broad category of technologies that provide tools for humans to accomplish complex tasks conveniently and efficiently. This makes it important to be specific about the type of AI technology when possible and to keep in mind the human drivers behind AI results. Consider using related terms like Large Language Models, Neural Networks, and GPTs, but be mindful of using each term in the right context. In the next article, we will review some of the more advanced terms that are used to describe the building blocks inside AI Engineering.